While attending the Domain Specific Modeling workshop at OOPSLA 2008, I heard many pointed criticisms of UML. No one went into detail, so I bought a book on DSM by Steven Kelly and Juha-Pekka Tolvanen. The book was not cheap: over ninety bucks when tax was added. (Doh!) So far I’ve read the first four chapters, which cover the problems of UML fairly well and also outline the basic tenets of the DSM philosophy. I’ve synthesized the gist of their points below. All the good ideas about modeling architecture below are imperfect summaries of Kelly and Tolvanen’s work. The opinionated ideas about programming languages and tools are my own.

UML applies abstraction at the wrong end of the problem. It is primarily used to sketch object models for inferior languages. As such, it tends to explode into incomprehensible patterns of accidental complexity in order to accommodate the various “design patterns” that are used to work around the lack of essential language features. Because UML models cannot be compiled, executed, or interpreted, they are reduced to the level of mere documentation. They are generally not even worth keeping in sync: the manual round trip from the code to the model and back is just too expensive for something that adds no more value to a project than an elaborate code comment. (Slides of elaborate UML diagrams are nevertheless great for impressing the uninitiated in a presentation, of course. That goes without saying!) Efforts to “fix” UML tend not to gain traction: the specification itself is too broad, and coding environments and applications are too diverse.

A modeling language needs to do three things in order to become useful:

First, it should map directly to domain problem concepts. UML is designed to map to coding architectures, and because of this it fails to raise the level of abstraction. The jump from assembler to C gave an order-of-magnitude increase in productivity because of a corresponding increase in abstraction. OOP languages and UML have not delivered the productivity gains they should have; in some cases they may even hurt productivity. Modelers should not even think about implementation details when they develop their language. Instead they need to focus on mapping their ideas directly to the domain concepts. (Note: this is not a new idea. Abelson and Sussman popularized this approach in their SICP lectures at MIT, where they demonstrated how Scheme would allow them to define programs that called functions that didn’t even exist yet. They would start with the right solution… and then gradually build up until it would run. Michael Harrison talked about this when he described the ‘wishful thinking’ approach to programming from SICP.)
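To make the ‘wishful thinking’ style concrete, here is a small Python transliteration loosely modeled on SICP’s rational-number example (the Python names are my own). The arithmetic operations are written first, in terms of a constructor and selectors that do not exist yet; the representation is chosen only afterwards. This works because Python, like Scheme, looks up global names when a function is called, not when it is defined.

```python
from math import gcd


# Written first, by "wishful thinking": these operations assume that
# make_rat, numer and denom exist, although none has been defined yet.
def add_rat(x, y):
    return make_rat(numer(x) * denom(y) + numer(y) * denom(x),
                    denom(x) * denom(y))


def mul_rat(x, y):
    return make_rat(numer(x) * numer(y), denom(x) * denom(y))


def equal_rat(x, y):
    return numer(x) * denom(y) == numer(y) * denom(x)


# Only now do we decide how a rational number is represented:
# a (numerator, denominator) tuple reduced to lowest terms.
def make_rat(n, d):
    g = gcd(n, d)
    return (n // g, d // g)


def numer(x):
    return x[0]


def denom(x):
    return x[1]


half = make_rat(1, 2)
third = make_rat(1, 3)
print(add_rat(half, third))  # (5, 6)
print(mul_rat(half, third))  # (1, 6)
```

Swapping the tuple for some other representation would only touch the last three functions; `add_rat` and friends keep speaking in terms of the problem, not the implementation.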

Second, the modeling language must be formalized. It must be a first-class citizen of the development process rather than just make-work for architects and project managers. It must be possible to generate useful executable code from the models made within the language. The language needs to add value not just in communicating with domain experts and helping them validate the “business logic”; it needs to add value at all levels of the development process. It needs to raise the level of abstraction for the code maintainers by allowing them to stop thinking about the underlying frameworks and libraries. It needs to contribute to testing efforts, both by eliminating the need to implement certain classes of tests and by providing a basis for generating other classes of tests automatically from the models. It needs to be possible to generate documentation automatically from the models. The models should be useful in and of themselves and should significantly benefit every development task downstream from them.
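Here is a deliberately tiny sketch of what “formalized” buys you: one model feeds three generators, producing executable code, test cases, and documentation. The order-workflow domain, the dict-based model format, and every function name are hypothetical, invented for illustration; real DSM tools provide their own metamodeling and generator facilities, and calling `exec` on generated source is just the shortest way to show the round trip here.

```python
# Hypothetical domain model: an order workflow described as data, not code.
ORDER_MODEL = {
    "name": "Order",
    "initial": "draft",
    "transitions": [
        ("draft", "submit", "submitted"),
        ("submitted", "approve", "approved"),
        ("submitted", "reject", "draft"),
        ("approved", "ship", "shipped"),
    ],
}


def generate_code(model):
    """Emit Python source for a state-machine class described by the model."""
    lines = [f"class {model['name']}:", "    TABLE = {"]
    for src, event, dst in model["transitions"]:
        lines.append(f"        ({src!r}, {event!r}): {dst!r},")
    lines += [
        "    }",
        "",
        "    def __init__(self):",
        f"        self.state = {model['initial']!r}",
        "",
        "    def handle(self, event):",
        "        key = (self.state, event)",
        "        if key not in self.TABLE:",
        "            raise ValueError(f'{event!r} not allowed in state {self.state!r}')",
        "        self.state = self.TABLE[key]",
        "        return self.state",
    ]
    return "\n".join(lines)


def compile_model(model):
    """'Compile' the model: generate source, execute it, return the class."""
    namespace = {}
    exec(generate_code(model), namespace)
    return namespace[model["name"]]


def generate_tests(model):
    """Derive one test case per transition: (start state, event, expected state)."""
    return list(model["transitions"])


def run_generated_tests(model, cls):
    """Run the derived test cases against the generated class; return the count."""
    cases = generate_tests(model)
    for src, event, expected in cases:
        machine = cls()
        machine.state = src  # start in the transition's source state
        assert machine.handle(event) == expected, (src, event)
    return len(cases)


def generate_docs(model):
    """Render a plain-text summary of the workflow for domain experts."""
    rows = [f"{model['name']} workflow (starts in '{model['initial']}'):"]
    for src, event, dst in model["transitions"]:
        rows.append(f"  - {event}: {src} -> {dst}")
    return "\n".join(rows)


Order = compile_model(ORDER_MODEL)
print(run_generated_tests(ORDER_MODEL, Order))  # 4
print(generate_docs(ORDER_MODEL))
```

Changing the model (say, adding a `cancel` transition) changes the code, the tests, and the documentation together, which is exactly the property a UML sketch lacks.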

Finally, the modeling language should have first-class tooling support. The tools should not be thought of as IDE extensions for programmers. These tools are not “wizards” that generate ugly code or partial stubs for developers to flesh out. The tooling should stand alone for the domain experts; if they have to think about the code at all, then the tools are being developed in the wrong direction. Expert developers who are aware of the intricacies of the domain problem need to organize their frameworks and libraries in such a way that the models can be “compiled” into fully functioning code. The correct analogy for this is, again, compiling C to machine code. C compilers are created by machine language experts. C programmers do not modify the compiled machine code; in practically all cases they use the results as-is. The C programming environment allows the programmer to work at a higher level, without having to think in terms of the underlying machine code or hardware. C is, in effect, a modeling language for machine code.

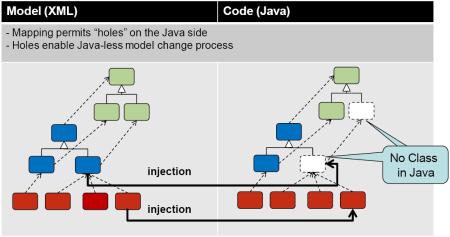

So, to get a modeling language that is actually useful, you have to go “Domain Specific” on both sides of the problem: the modeling language has to map directly to the problem domain and the generated code has to map directly to the target environment. There are two linguistic abstraction barriers that must be implemented in order to make this work: 1) the modeling language between the models and the generated code and 2) the framework between the generated code and the target libraries. You must build up from your core code components to the framework… and you must build down from the models to the generated code. If the code generation process is too complicated, you may need better abstractions at the framework level. If the code generation process is impossible, then the modeling language may not be providing a detailed enough description of the requirements. If there is too much repetition in the models, then the modeling language will need to be extended to cover additional concepts.
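The two barriers can be sketched in a few lines of Python. Everything here is a hypothetical example of my own: a pricing domain where the model speaks only of customer tiers and discount percentages, a hand-written framework function `apply_discount` that hides the target library (Python’s `decimal` module), and a generator that emits code calling only the framework.

```python
from decimal import Decimal, ROUND_HALF_UP


# Framework layer: built *up* by hand by developers who know the target
# library (here Python's decimal module). It exposes one domain-level operation.
def apply_discount(amount_cents, percent):
    """Return amount_cents reduced by percent, rounded half-up to whole cents."""
    discounted = Decimal(amount_cents) * (100 - Decimal(percent)) / 100
    return int(discounted.quantize(Decimal(1), rounding=ROUND_HALF_UP))


# Model: written by domain experts, in domain terms only.
PRICING_MODEL = [
    {"tier": "gold", "discount_percent": 10},
    {"tier": "silver", "discount_percent": 5},
]


# Generator: built *down* from the model; the code it emits calls only the
# framework, never the decimal module directly.
def generate_pricing_code(model):
    lines = ["def price_for(tier, amount_cents):"]
    for rule in model:
        lines.append(f"    if tier == {rule['tier']!r}:")
        lines.append(
            f"        return apply_discount(amount_cents, {rule['discount_percent']})"
        )
    lines.append("    return amount_cents  # tiers not in the model pay full price")
    return "\n".join(lines)


namespace = {"apply_discount": apply_discount}
exec(generate_pricing_code(PRICING_MODEL), namespace)
price_for = namespace["price_for"]

print(price_for("gold", 1999))    # 1799
print(price_for("silver", 1999))  # 1899
```

If the generator ever needed to emit `Decimal` arithmetic itself, that would be the signal described above: the framework needs a better abstraction. And if the model started repeating the same rule for many tiers, that would suggest extending the modeling language instead.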

As Steven Kelly said in a 2006 article, “To make model-driven development work this way, you cannot use a general-purpose design language like UML and a modeling tool’s built-in code generator. The people that created UML did not design it for describing applications in your domain, or for generating code other than skeletons. Despite the efforts of many to make it suitable for generation, no one has ever, or will ever, make it happen in a way which is still smart and convenient. An application’s behavior and the rules it has to adhere to are domain-specific. To capture that effectively and completely in a graphical design, you need a Domain-Specific Modeling language.”

Update 10/30/08:

I have discovered some interesting ideas from the opposite point of view. Here are some remarks from Franco Civello, someone who has used UML successfully in model-driven development:

“… having produced informal use cases to clarify requirements, and a domain model to get an initial understanding of the subject area, the analyst produces a precise specification model, in UML, in which the system to be developed is represented as an object, belonging to a type (note, not a class, as the system is an abstraction used to define visible behaviour, not a software entity to be directly implemented in e.g. Java).”

Notice the key similarity there with the Kelly/Tolvanen approach. Civello is not using UML to describe the code architecture; he is mapping the UML more toward the problem domain.

“Steps in the use case flows are then formalised as operations on the system type, with a declarative specification of behaviour based on the notion of functional contract, written as pre- and post-conditions expressed on an underlying model (the system type model, derived closely from the domain model).”
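The notion of a functional contract can be made concrete with a small sketch. The library-loan domain, the `MAX_LOANS` rule, and every name below are my own hypothetical illustration, not Civello’s; the point is that `borrow_pre` and `borrow_post` state *what* the operation must achieve without saying *how*, and any implementation, hand-written or generated, can be checked against them.

```python
from dataclasses import dataclass, replace

MAX_LOANS = 3  # hypothetical business rule


@dataclass(frozen=True)
class LibraryState:
    """Abstract system state: a specification model, not a software design."""
    available: frozenset  # titles currently on the shelf
    loans: frozenset      # (member, title) pairs


def loans_of(state, member):
    """Query on the system type model: titles a member has borrowed."""
    return {title for (m, title) in state.loans if m == member}


# Functional contract for the system operation "borrow", stated declaratively:
# when it may be invoked (pre) and what must be true afterwards (post).
def borrow_pre(state, member, title):
    return title in state.available and len(loans_of(state, member)) < MAX_LOANS


def borrow_post(old, new, member, title):
    return (new.available == old.available - {title}
            and new.loans == old.loans | {(member, title)})


def check_contract(pre, post, impl, state, *args):
    """Run a candidate implementation and verify it honors the contract."""
    if not pre(state, *args):
        raise ValueError(f"precondition not met for {args}")
    new_state = impl(state, *args)
    if not post(state, new_state, *args):
        raise AssertionError(f"postcondition violated for {args}")
    return new_state


# One possible implementation; the contract above does not depend on it.
def borrow_impl(state, member, title):
    return replace(state,
                   available=state.available - {title},
                   loans=state.loans | {(member, title)})


s0 = LibraryState(available=frozenset({"SICP", "DSM"}), loans=frozenset())
s1 = check_contract(borrow_pre, borrow_post, borrow_impl, s0, "ann", "SICP")
print(sorted(s1.available))  # ['DSM']
```

A buggy implementation that, say, forgot to record the loan would be rejected by `borrow_post`, which is also how such contracts can seed generated tests.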

Again, this reflects my second point: the modeling language must be formalized at some point. Note also that we (possibly) have an analog to the declarative approaches I saw in the ModelTalk presentation last week.

“UML static modelling gives you the language to represent the state of the system in abstract and yet precise terms. Just don’t think of your classes as software things with methods and member data, but as specification types that give you the vocabulary to specify the business outcome of a system operation. These types can have attributes, associations, queries, constraints and definitions, all powerful UML concepts, but no state-changing operations. The only state-changing operations are defined at the system level, and are not elaborated into message-based solutions, but just specified declaratively in terms of business logic, steering clear of any design decisions.”
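The type vocabulary Civello lists can be approximated in Python with frozen dataclasses. This is a rough analogue of my own, not UML or OCL, and the banking domain and all names are hypothetical. `Customer` and `Account` carry attributes and an association, `available_funds` plays the role of a definition, `constraint_ok` is a constraint, `accounts_of` is a query, and freezing the dataclasses means the specification types really have no state-changing operations.

```python
from dataclasses import dataclass, FrozenInstanceError


@dataclass(frozen=True)
class Customer:
    name: str  # attribute


@dataclass(frozen=True)
class Account:
    number: str              # attribute
    owner: Customer          # association: every account has exactly one owner
    balance: int             # attribute, in cents
    overdraft_limit: int = 0

    @property
    def available_funds(self):
        """Definition: a derived value computed from attributes, never stored."""
        return self.balance + self.overdraft_limit

    def constraint_ok(self):
        """Constraint: must hold in every valid state of the system."""
        return self.available_funds >= 0


@dataclass(frozen=True)
class Bank:
    """The system type: the only place where state changes would be specified."""
    accounts: frozenset

    def accounts_of(self, customer):
        """Query: navigate the Customer-Account association, read-only."""
        return {a for a in self.accounts if a.owner == customer}

    def all_constraints_hold(self):
        return all(a.constraint_ok() for a in self.accounts)


ann = Customer("Ann")
bank = Bank(frozenset({Account("A-1", ann, 500),
                       Account("A-2", ann, -200, overdraft_limit=100)}))
print(bank.all_constraints_hold())  # False: A-2 is 100 cents past its limit

try:
    Account("A-3", ann, 0).balance = 50
except FrozenInstanceError:
    print("specification types have no state-changing operations")
```

Any change to an account would then be expressed as a system-level operation on `Bank` that produces a new state, specified by a pre- and post-condition contract in the style Civello describes above rather than by a setter.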